While disinformation activities and the volume of related verification work increase, the workflow for verifying digital content items and detecting disinformation remains complex and time-consuming, and it often requires specialists. The current generation of support tools lacks functions for identifying the most recent forms of manipulated content and for easily understanding disinformation patterns in social media. In addition, the technologies used for content manipulation and disinformation have become more advanced and now include synthetic media. As more journalists and other researchers become involved in content verification and disinformation detection, there is a need for upgraded, easy-to-use tools with trustworthy AI functions. To ensure that AI-based tools are accepted and can feasibly be implemented, users need to be able to assess the trustworthiness of machine-generated results and predictions with regard to aspects such as Explainability, Bias Mitigation, Robustness and Ethical Compliance.

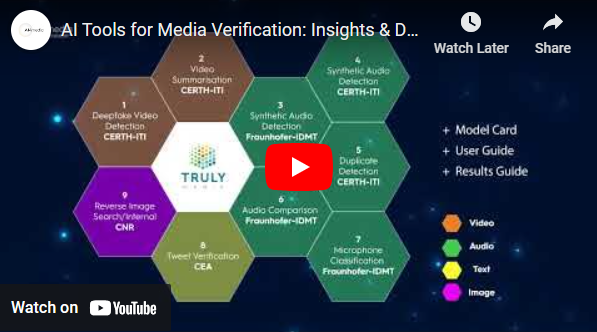

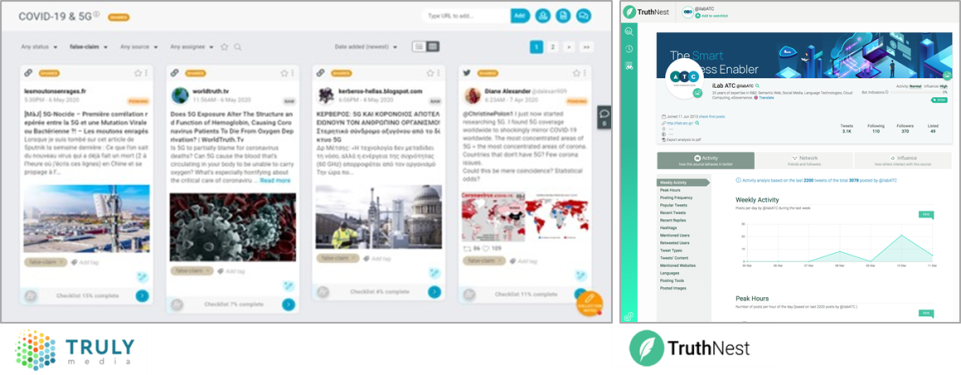

This use case from Deutsche Welle and ATC leverages AI technologies to improve support tools used by journalists and fact-checking experts for digital content verification and disinformation detection. New AI-based features will be made available within two existing journalism tools: Truly Media (a web-based platform for collaborative verification) and TruthNest (a Twitter analytics and bot detection tool).

Two topics are covered: 1) verification of content from social media, with a focus on synthetic media detection, and 2) detection of communication narratives and patterns related to disinformation. A further aspect is the exploration of Trustworthy AI in relation to these topics and to the specific needs of media organisations.

The detection and verification of synthetic media covers both fully synthetic media items and synthetic elements within otherwise authentic media items. Detecting such content provides contextual information for the verification process, helping journalists and researchers to understand specific manipulation activities. The more advanced synthetic media becomes, the more difficult and time-consuming it is to detect. While there are useful and entertaining applications of synthetic media, it is also used for targeted, malicious disinformation. Synthetic media items misused for disinformation purposes are colloquially known as deepfakes. All types of content can be synthetically generated or manipulated: text, images, audio, video and elements within AR/VR experiences. Synthetic characters can be either fictional or closely resemble a real person, including that person's voice and personality.

Another goal is to provide AI-based tools for better understanding communication patterns in social media that are related to disinformation. This includes the detection of topical disinformation campaigns or stories, which may contain false or distorted information. Such campaigns and stories can emerge dynamically, or they can be steered by certain social media actors and networked communities to achieve a specific objective. We address these challenges by collecting specific user needs in the area of content verification and disinformation detection that advanced AI functionalities might address, including needs for associated Trustworthy AI features. Once the respective AI tools have been developed in AI4Media, they will be tested against end-user and business needs within Truly Media and TruthNest.

AI technologies for content verification and disinformation detection developed in AI4Media will be integrated into existing journalism tools such as Truly Media and TruthNest.

Discover more about this topic in the whitepaper "AI Support Needed to Counteract Disinformation".

Watch Video – Demo