Author: Lidia Dutkiewicz, Legal researcher (Center for IT & IP Law (CiTiP), KU Leuven)

During the past year, the European Commission (EC) proposed a comprehensive package of regulatory measures that address problems posed by the development and use of AI and digital platforms. These include the AI Package, the Digital Services Act and the Digital Markets Act, as well as the Data Governance Act and the forthcoming Data Act. In particular, the Artificial Intelligence Act (AI Act) presented by the EC in April 2021 represents a key milestone in defining the European approach to AI.

In a first-of-its-kind legislative proposal, the EC aims to set a global standard for how to address the risks generated by specific uses of AI through a set of proportionate and flexible legal rules. The key question is: how will these legislative proposals affect the use of AI in the media sector?

During the first year of the AI4Media project, one of the key milestones was to provide a clear overview of existing and upcoming EU policy and regulatory frameworks in the field of AI. In the last few years, there has been a variety of publications, guidelines, and political declarations from various EU institutions on AI. These documents provide valuable insight into the future of AI regulation in the EU. However, the large number of developments in the EU in the area of “AI policy initiatives” makes it very difficult for AI providers and researchers to monitor the ongoing debates and understand the legal requirements applicable to them. The key challenge is to assess the possible implications of the proposed rules on AI applications in the media sector, i.e. for content moderation, advertising, and recommender systems.

Our analysis of the EU policy on AI considers the impact of these EU initiatives on the AI4Media project in four distinct areas. First, access to social media platforms’ data allows researchers to carry out public interest research into platforms’ takedown decisions, recommender systems, mis- and disinformation campaigns and so on. However, in recent years it has become increasingly difficult for researchers to access that data. That’s why there is a clear need for a legally binding data access framework that provides independent researchers with access to a range of different types of platform data. Recent regulatory initiatives, such as the Digital Services Act (DSA), try to address this problem.

Article 31 of the DSA proposal contains a specific provision on data access. However, it narrows access to platforms’ data to “vetted researchers”, namely university academics, which excludes a variety of different actors: journalists, educators, web developers, fact-checkers, digital forensics experts, and open-source investigators. Moreover, “vetted researchers” will be able to access platforms’ data only for purposes of research into “systemic risks”… The final scope of this provision will, undoubtedly, shape the way in which (vetted) researchers, journalists, and social science activists will be able to access platforms’ data. This is particularly relevant for AI4Media activities such as opinion mining from social media platforms or detection of disinformation trends.

Second, it is extremely important to clarify the position of academic research within the AI Act. It is currently unclear whether the AI Act’s primary objective, i.e. “to set harmonised rules for the development, placement on the market and use of AI systems”, and its legal basis exclude non-commercial academic research from the scope of the Regulation.

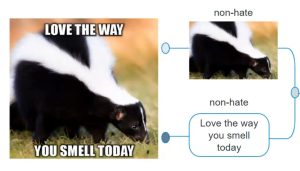

Third, the scope of the AI Act is unclear when it comes to its applicability to media applications. Importantly, certain practices, such as the use of subliminal techniques or the use of AI systems that exploit the vulnerabilities of a specific group of persons, are prohibited. However, the current wording of these provisions makes it unclear whether and to what extent online social media practices such as dark patterns fall within the scope of this prohibition. The AI Act also proposes certain transparency obligations applicable to AI systems intended to interact with natural persons, emotion recognition systems and deep fakes. However, the requirements lack precision on what should be communicated (the type of information), when (at which stage this should be revealed) and how. The important research questions which will be tackled in future AI4Media activities include:

- Does the category of ‘AI systems intended to interact with natural persons’ encompass recommender systems or robot journalism?

- Do ‘sentiment analysis’ and the measuring and predicting of users’ affective responses to multimedia content distributed on social media, based on physiological signals, fall under the definition of an “emotion recognition” system?

Fourth, the use of AI to detect IP infringements and/or illegal content is one of the key legal and societal challenges in the field of AI and media. The key questions center around the role of ex-ante human review mechanisms before removing content and the potential violation of human rights, such as freedom of expression, when legal content is removed. Platform responsibility for third-party infringing and/or illegal content will be particularly relevant in AI4Media’s activity related to the “Integration with AI-On-Demand Platform”.

Considering the ever-changing legal landscape, the analysis of the EU policy and regulatory frameworks in the field of AI is not a one-off exercise. Rather, the preliminary analysis done so far serves as a solid basis for the upcoming work in the later stages of the project, namely “Pilot Policy Recommendations for the use of AI in the Media Sector” and “Assessment of social/economic/political impact from future advances in media AI technology and applications”.

Stay tuned for more!