2.1. The AI Package

On 21 April 2021, the Commission published its AI package, proposing new rules and actions aimed at turning Europe into the global hub for trustworthy AI. The contents of the AI package are discussed in the following subsections.

2.1.1. Communication on Fostering a European Approach to Artificial Intelligence

The Communication explains that, to be future-proof and innovation-friendly, the proposed legal framework is designed to intervene only where strictly needed, following a proportionate and risk-based European regulatory approach. The proposed AI regulatory framework is meant to work in sync with existing and proposed legislation, such as the Digital Services Act and the Digital Markets Act, as well as with the European Democracy Action Plan.

2.1.2. Coordinated Plan on Artificial Intelligence 2021 Review

The Coordinated Plan puts forward a concrete set of joint actions for the Commission and Member States on how to create EU global leadership in trustworthy AI. It also sets out a vision to accelerate investments in AI, spur the implementation of national AI strategies, and align AI policy to remove fragmentation and address global challenges. To this end, the Coordinated Plan puts forward four key sets of proposals for the EU and Member States:

1. Set enabling conditions for AI’s development and uptake in the EU

Creating broad enabling conditions for AI technologies to succeed in the EU through acquiring, pooling and sharing policy insights, tapping into the potential of data and fostering critical computing infrastructure.

2. Make the EU the place where excellence thrives from the lab to the market

Ensure that the EU has a strong ecosystem of excellence including world-class foundational and application-oriented research and capabilities to bring innovations from the ‘lab to the market’. Testing and experimentation facilities (TEFs), European Digital Innovation Hubs (EDIHs) and the European ‘AI-on-demand’ platform will play a key role in facilitating a broad uptake and deployment of AI technologies.

3. Ensure that AI works for people and is a force for good in society

The EU has to ensure that the AI systems developed and put on the market in the EU are human-centric, secure, inclusive, and trustworthy. Therefore, the proposed actions focus on:

– Nurturing talent and improving the supply of skills necessary to enable a thriving AI ecosystem

– Developing the policy framework to ensure trust in AI systems

– Promoting the EU vision on sustainable and trustworthy AI in the world

4. Build strategic leadership in high-impact sectors

To align with market developments and ongoing actions in Member States, and to reinforce the EU’s position on the global scale, the plan puts forward joint actions in seven sectors: environment; health; a strategy for robotics in the world of AI; the public sector; transport; law enforcement, migration and asylum; and agriculture.

2.1.3. Proposal for a Regulation laying down harmonised rules on artificial intelligence (Artificial Intelligence Act)

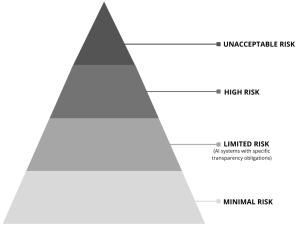

The proposed AI Act’s primary objective is to ensure the proper functioning of the internal market by setting harmonised rules in particular on the development, placing on the Union market, and use of products and services making use of AI technologies or provided as stand-alone AI systems. Therefore, the Act proposes a risk-based approach, differentiating between uses of AI that create (i) unacceptable risks (Title II); (ii) high risks (Title III); (iii) limited risks (Title IV); and (iv) minimal risks (Title IX).

Image adapted from the EU’s “Regulatory framework proposal on artificial intelligence“

Title I – Scope and Definitions

Title I defines the subject matter and scope of the Regulation and sets out its definitions.

Title II – Prohibited AI Practices

Article 5 lists AI practices whose use is considered unacceptable because they contravene Union values, such as human dignity, freedom, equality, democracy, the rule of law, and the fundamental rights enshrined in the EU Charter of Fundamental Rights. These practices include:

– AI systems that deploy subliminal techniques to distort a person’s behaviour in a manner that causes or is likely to cause that person or another person physical or psychological harm.

– Practices and services that exploit the vulnerabilities of a specific group of people due to their age or physical or mental disability.

– Social scoring by public authorities.

– Real-time remote biometric identification systems in publicly accessible spaces for the purpose of law enforcement, subject to limited exceptions.

Title III – High-risk AI systems

Title III governs AI systems that pose a ‘high risk’ to ‘health, safety and fundamental rights’ in certain defined applications, sectors and products. Title III applies to two main subcategories of AI systems: (i) AI systems intended to be used as a safety component of a product, or that are themselves products, already covered by the Union harmonisation legislation listed in Annex II (such as toys, machinery, lifts, or medical devices); and (ii) stand-alone AI systems with mainly fundamental rights implications that are explicitly listed in Annex III.

Title IV – Transparency obligations for certain AI systems

Article 52 of the proposal sets out transparency obligations that apply to systems that (i) interact with humans, (ii) are used to detect emotions or determine association with (social) categories based on biometric data, or (iii) generate or manipulate content (‘deep fakes’).

Title V – Measures in support of innovation

In this Title, the Commission aims to support innovation, research and smaller players such as SMEs and start-ups. Articles 53 and 54 concern the creation of AI regulatory sandboxes established at Member State level.

Article 55 focuses specifically on measures supporting SMEs, obliging Member States to give them priority access to AI regulatory sandboxes, to develop dedicated communication channels and awareness-raising activities, and to take their specific needs and interests into account when setting conformity assessment fees.

Other provisions of the proposal concern governance and implementation measures (Titles VI, VII and VIII), the encouragement and facilitation of codes of conduct (Title IX), and final provisions, including the penalty regime for non-compliance (Titles X, XI and XII).

Authors:

Lidia Dutkiewicz, Emine Ozge Yildirim, Noémie Krack from KU Leuven

Lucile Sassatelli from Université Côte d’Azur
