Addressing challenges for the use of AI in media. What ways forward?

How can media companies, researchers, and legal and social science scholars tackle the key challenges raised by the use of AI applications in the media sector? The deliverable D2.4 “Policy Recommendations for the use of AI in Media Sector” is the result of interdisciplinary research by legal, technical, and societal AI4Media experts, as well as an analysis of 150 responses from AI researchers and media professionals collected through the AI4Media survey. It provides initial policy recommendations to EU policymakers for addressing these challenges.

There is enormous potential for the use of AI at the different stages of media content production, distribution and re-use. AI is already used in various applications, from content gathering and fact-checking, through content distribution and content moderation, to audio-visual archives. However, the use of AI in media also brings considerable challenges for media companies and researchers, and it poses societal, ethical and legal risks.

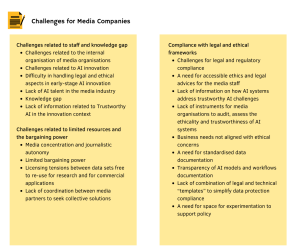

Media companies often struggle with staff and knowledge gaps, limited resources (e.g. a limited budget for innovation activities), and a power imbalance vis-à-vis large technology and platform companies that act as providers of AI services, tools and infrastructure. Another set of challenges relates to legal and regulatory compliance. This includes the lack of clear and accessible ethical and legal advice for media staff, as well as the lack of guidance and standards to assess and audit the trustworthiness and ethical soundness of the AI used in media applications.

To overcome some of these challenges, the report provides the following initial recommendations to EU policymakers:

- Promoting EU-level programmes for training media professionals

- Promoting and funding the development of national or European clusters of media companies and AI research labs that will focus on specific topics of wider societal impact

- Promoting initiatives such as the Media Data Space, which could be extended to pool together AI solutions and applications in the media sector

- Fostering the development of regulatory sandboxes to support early-stage AI innovation

- Providing practical guidance on how to implement ethical principles, such as the AI HLEG Guidelines for Trustworthy AI, in specific media-related use cases

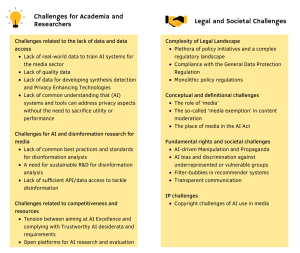

Researchers in AI and media often face challenges that relate predominantly to data: the lack of real-world, high-quality and GDPR-compliant datasets for AI research. Disinformation analysis within media companies suffers not only from the restricted application programming interfaces (APIs) of online platforms but also from the lack of common guidelines and standards on which AI tools to use, how to interpret their results and how to minimise confirmation bias in the content verification process.

To overcome some of these challenges, the report provides the following initial recommendations to EU policymakers:

- Supporting the development of publicly available, cleared and GDPR-compliant datasets for AI research (a go-to place for sharing quality AI datasets)

- Providing formal guidelines on AI and the GDPR that address practical questions faced by the media sector, such as using and publishing datasets containing social media data

- Promoting the development of standards for bilateral data-sharing agreements between media/social media companies and AI researchers

There are also considerable legal and societal challenges for the use of AI applications in the media sector. Firstly, the legal landscape is complex, with a plethora of initiatives that apply to media only indirectly, and there is a lack of certainty about whether and how various legislative and regulatory proposals, such as the AI Act, apply to the media sector. Moreover, societal and fundamental rights challenges relate to the possibility of AI-driven manipulation and propaganda, AI bias and discrimination against underrepresented or vulnerable groups, and the negative effects of recommender systems and content moderation practices.

To overcome some of these challenges, the report provides the following initial recommendations to EU policymakers:

- Facilitating the establishment of standardised processes to audit AI systems for bias and discrimination

- Providing a clear vision of the relationship between legacy (traditional) media and very large online platforms in light of their “opinion power” over public discourse

- Clarifying the applicability of the AI Act proposal to media AI applications

- Ensuring the coherence of AI guidance across different standard-setting organisations (CoE, UN, OECD,…)

Lastly, the report reflects on the potential development of a European Digital Media Code of Conduct as a possible way to tackle the challenges related to the use of AI in media. It first maps the European initiatives that already establish codes of conduct for the use of AI in media. The report then proposes alternatives to a European Digital Media Code of Conduct. It notes that, instead of a high-level list of principles, media companies need a practical, theme-by-theme guide to ethical compliance in real-life use cases. Another possible solution would put more focus on certification to ensure the fair use of AI in media.

Author: Lidia Dutkiewicz, Center for IT & IP Law (CiTiP), KU Leuven