1.2. Ethics and Trustworthy AI Initiatives

1.2.1. The Ethics Guidelines for Trustworthy Artificial Intelligence

On 8 April 2019, the EU’s High-Level Expert Group on AI (HLEG), a multi-stakeholder group of fifty-two experts, published the “Ethics Guidelines for Trustworthy AI”. The Guidelines are not legally binding. They do, however, pave the way for the future “AI regulation”. Stakeholders are, moreover, encouraged to adopt the Guidelines on a voluntary basis. To help operationalise the ethical requirements, the HLEG has also published the “Assessment List for Trustworthy Artificial Intelligence (ALTAI) for self-assessment” and an accompanying online tool. Another useful resource was developed in the context of the AI4EU project: an abbreviated assessment list to support the Responsible Development and Use of AI. It is a self-assessment tool that can help organisations perform a ‘quick scan’ of the AI application they want to develop, procure, deploy, or use.

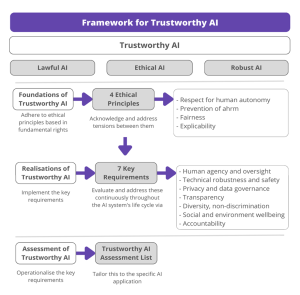

1.2.2. Framework for Trustworthy AI

The AI HLEG Guidelines are centred around the concept of “trustworthy AI”. If AI systems are not trustworthy, unwanted consequences may arise and prevent the realisation of the social and economic benefits of AI. According to the Guidelines, the three pillars of trustworthy AI are:

– Lawfulness: compliance with all applicable laws and regulations (international, European and national)

– Ethics: respect for ethical principles and values

– Robustness: from both a technical and a social perspective (to avoid adverse impacts)

Moreover, according to the HLEG, the foundations of Trustworthy AI are the fundamental rights enshrined in the EU Treaties, the EU Charter and international human rights law.

Image adapted from the EU’s High-Level Expert Group (HLEG), “Ethics Guidelines for Trustworthy AI”

1.2.3. Four Principles of Trustworthy AI

The HLEG Guidelines propose the following four ethical principles (“imperatives”) “which must be respected in order to ensure that AI systems are developed, deployed and used in a trustworthy manner”:

1. The principle of respect for human autonomy

This principle means that humans interacting with AI systems must be able to keep full and effective self-determination over themselves. AI systems should therefore follow human-centric design principles, augmenting, complementing, and empowering human cognitive, social, and cultural skills.

2. The principle of prevention of harm

AI systems should neither cause nor exacerbate harm to human beings, nor otherwise adversely affect them; this protection also extends to the natural environment and all living beings. Developers need to ensure that AI does not infringe on human rights or cause “bodily injury or severe emotional distress to any person” by assessing their technologies’ safety, testing their algorithms to determine that no harm results from them, and implementing algorithmic accountability standards for any foreseeable negative impacts. Vulnerable persons should receive greater attention and be included in the development, deployment, and use of AI systems, so as to avoid information and power asymmetries.

3. The fairness principle

There are many different interpretations of fairness, but the HLEG Guidelines state that, to comply with this principle, AI design should be “fit for purposes, identify impacts on different aspects of society and should be designed to promote human welfare, rather than endanger it”. Two dimensions are attached to this principle:

– a substantive dimension containing:

➢ Ensuring the equal and just distribution of both benefits and costs

➢ Ensuring that individuals and groups are free from unfair bias, discrimination, and stigmatisation

➢ Ensuring equal opportunity in terms of access to education, goods, services, and technology. AI should be accessible to those who are often “socially disadvantaged”, such as persons with disabilities

➢ Preventing deception of people or unjustifiable deterioration of their freedom of choice

➢ Respecting the principle of proportionality between means and ends

➢ Considering carefully how to balance competing interests and objectives

– a procedural dimension referring to a fair chance to receive information, respond, and dispute (challenge, contest, seek redress and remedies) for the affected party.

4. The principle of explicability

The AI HLEG sees explicability as a key principle for building and maintaining users’ trust in AI systems. What ‘explicability’ means varies among stakeholders active in AI, and it is often considered a controversial imperative, as some argue that it is not the process that requires explicability but rather its result. According to the AI HLEG’s Guidelines, however, explicability encompasses both transparency and explainability. In the HLEG’s own words, “[explicability] means that processes need to be transparent, the capabilities and purpose of AI systems openly communicated, and decisions – to the extent possible – explainable to those directly and indirectly affected.” Nevertheless, explicability is not always possible, for instance with so-called ‘black box’ algorithms; in such cases, other measures may be required, such as traceability, auditability and transparent communication on system capabilities.

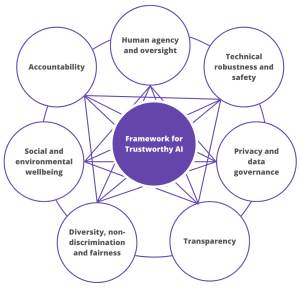

1.2.4. Seven Requirements of Trustworthy AI

Alongside the principles, the HLEG Guidelines list the following seven requirements for achieving trustworthy AI.

Image adapted from the EU’s High-Level Expert Group (HLEG), “Ethics Guidelines for Trustworthy AI”

1. Human Agency and Oversight

AI systems should act as enablers of a democratic, flourishing, and equitable society by supporting the user’s agency, upholding fundamental rights, and allowing for human oversight. The following mechanisms could be adopted to ensure compliance with this requirement:

– A fundamental rights impact assessment should be undertaken prior to the development of the system. Other mechanisms should also be put in place to receive external feedback regarding AI systems that potentially infringe on human rights.

– Human-in-the-loop (HITL), Human-on-the-loop (HOTL), and/or Human-in-command (HIC) mechanisms should be considered for the human oversight sub-requirement.

2. Technical Robustness and Safety

Robustness: AI systems should be developed with a preventative approach to risks, so that they reliably behave as intended while minimising unintentional and unexpected harm and preventing unacceptable harm.

Safety: AI systems should also have safeguards that enable a fall-back plan in case of problems. It must be ensured that the system does what it is supposed to do without harming humans or the environment.

In order to comply, the following mechanisms could be adopted:

– Ensuring that user authentication is in place to prevent risks such as unauthorised access to, or modification or disclosure of, data.

– Using unique, cryptographically strong pseudo-random identifiers that are renewed regularly.

– Ensuring that the system can easily be deactivated, and that the system and its data can be removed, once users are no longer interested in it or no longer need it.

– Paying attention to whether the development is participatory and multidisciplinary.
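The identifier mechanism listed above (unique, cryptographically strong, pseudo-random, renewed regularly) can be illustrated with a short sketch. It assumes Python's standard `secrets` module as the source of randomness; the class name `RotatingIdentifier`, the 128-bit length and the 24-hour default rotation period are illustrative assumptions, not values taken from the HLEG Guidelines.

```python
import secrets
import time
from typing import Callable, Optional


class RotatingIdentifier:
    """Hypothetical helper: hands out a cryptographically strong pseudo-random
    identifier and replaces it once the rotation period has elapsed."""

    def __init__(self, rotation_seconds: float = 24 * 3600,
                 clock: Callable[[], float] = time.monotonic) -> None:
        # The 24-hour default is an illustrative assumption, not an HLEG value.
        self.rotation_seconds = rotation_seconds
        self._clock = clock
        self._current: Optional[str] = None
        self._issued_at = 0.0

    def current(self) -> str:
        now = self._clock()
        expired = now - self._issued_at >= self.rotation_seconds
        if self._current is None or expired:
            # secrets.token_hex draws from the OS CSPRNG; 16 bytes = 128 bits,
            # rendered as 32 hex characters. Collisions are negligible at this
            # size, so no history of past identifiers is retained.
            self._current = secrets.token_hex(16)
            self._issued_at = now
        return self._current
```

Injecting `clock` lets the rotation be tested without waiting; in a deployed system the default monotonic clock would be used, and the rotation period would be chosen on the basis of the system's own risk assessment.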

3. Privacy and Data Governance

AI systems must guarantee privacy and data protection throughout their entire lifecycle. This includes the information initially provided by the user, as well as the information generated about the user over the course of their interaction with the system (e.g., outputs that the AI system generated for specific users or how users responded to particular recommendations). Moreover, to allow individuals to trust the data gathering process, it must be ensured that data collected about them will not be used to unlawfully or unfairly discriminate against them. The following mechanisms could therefore be used to ensure compliance:

– Data collection is compliant with the General Data Protection Regulation (GDPR) and it respects the privacy of the individual.

– A Data Protection Impact Assessment (DPIA) has been carried out before the deployment of any system.

– Compliance with the data minimisation principle, based on data protection by design, e.g. through the use of local and temporary storage and encryption.

– Ensuring that only strictly necessary data are captured and processed.

– Ensuring that the ownership of the resource (including code, data, use) is clear.
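The requirement to capture and process only strictly necessary data can be sketched as an allow-list filter applied before storage. In this minimal sketch, the field names and the `ALLOWED_FIELDS` set are hypothetical, and pseudonymising the user ID with a keyed hash (HMAC-SHA256 from Python's standard `hmac` and `hashlib` modules) is one possible technique chosen for illustration. Note that pseudonymised data still counts as personal data under the GDPR, so this does not replace the other mechanisms listed above.

```python
import hashlib
import hmac
from typing import Any, Dict

# Hypothetical allow-list: the only fields this illustrative service needs.
ALLOWED_FIELDS = {"user_id", "language", "age_band"}


def minimise(record: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    """Drop every field not on the allow-list and pseudonymise the user ID."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "user_id" in kept:
        # Keyed hash: the same ID maps to the same pseudonym under one key,
        # but cannot be linked back to the raw ID without that key.
        kept["user_id"] = hmac.new(
            key, str(kept["user_id"]).encode("utf-8"), hashlib.sha256
        ).hexdigest()
    return kept
```

Filtering with an allow-list rather than a block-list means that any new field added upstream is dropped by default until someone decides it is strictly necessary.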

4. Transparency

This requirement is closely linked with the principle of explicability and encompasses transparency of elements relevant to an AI system: the data, the system, and the business models.

• Traceability

The data sets and the processes that yield the AI system’s decisions, including data gathering and data labelling as well as the algorithms used, should be documented to the best possible standard to allow for traceability and increased transparency. The same applies to the decisions made by the AI system.
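One way to make individual decisions traceable is to write an audit record that links each output to the model and data set versions behind it. The sketch below assumes a hypothetical schema (`DecisionRecord` and its field names are illustrative, not prescribed by the Guidelines) and uses only Python's standard library; storing a SHA-256 hash of the input instead of the raw input is a design assumption meant to limit personal data in logs.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One audit-log entry (hypothetical schema) for a single AI decision."""
    timestamp: str
    model_version: str
    dataset_version: str
    input_sha256: str  # hash of the input rather than the raw input
    output: str


def log_decision(model_version: str, dataset_version: str,
                 model_input: dict, output: str) -> str:
    """Return a JSON line recording the decision for later audit."""
    # Canonical JSON (sorted keys) so identical inputs always hash the same.
    canonical = json.dumps(model_input, sort_keys=True).encode("utf-8")
    record = DecisionRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_version=model_version,
        dataset_version=dataset_version,
        input_sha256=hashlib.sha256(canonical).hexdigest(),
        output=output,
    )
    return json.dumps(asdict(record), sort_keys=True)
```

Hashing is not anonymisation: inputs with few possible values can still be recovered by trying candidates, so access to such logs would itself need to be controlled.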

• Explainability

Technical explainability requires that the decisions made by an AI system can be understood and traced by human beings. Whenever an AI system has a significant impact on people’s lives, it should be possible to demand a suitable explanation of the AI system’s decision-making process. Such explanation should be timely and adapted to the expertise of the stakeholder concerned (e.g., layperson, regulator or researcher).

• Communication

AI systems should not represent themselves as humans to users; humans have the right to be informed that they are interacting with an AI system. This entails that AI systems must be identifiable as such. The following should be considered to comply with the requirement:

– Ensuring that users have the right to be notified that their data is being collected and processed, to access information on which personal data are collected, to control their own data, to access explanations of results produced by the system, and to be informed of who can audit the system, when, and how.

– Ensuring openness over data governance.

– The system should be covered by an explicit, clear legal framework or sectorial formal policies, and these should be addressed explicitly.

– Explicit information on the design process is available, including a clear description of aims and motivation, stakeholders, public consultation process and impact assessment.

5. Diversity, Non-discrimination and Fairness

The inclusion and diversity components of this requirement are closely linked with the principle of fairness, which prescribes that the development, deployment and use of AI systems must be fair by ensuring equal and just distribution, safeguarding against unfair bias, providing equal access opportunities, respecting the principle of proportionality between means and ends, and balancing competing interests and objectives. This requirement could therefore be fulfilled by enabling inclusion and diversity throughout the entire life cycle of the AI system in the following ways:

– Consideration and involvement of all affected stakeholders throughout the entire process, taking into account the range of social and cultural viewpoints.

– Providing an analysis of the effect of the AI application on the rights to safety, health, non-discrimination, and freedom of association.

– Guaranteeing equal access through inclusive design, regardless of demographics, language, disability, digital literacy, or financial means.

– Ensuring equal treatment.

6. Societal and Environmental Well-being

The requirement of societal and environmental well-being is in line with the UN Sustainable Development Goals and steers AI systems towards benefiting all human beings, including future generations. Concerning environmental well-being, the Guidelines encourage the sustainability and ecological responsibility of AI systems: throughout their whole life cycle, AI systems should be designed in the most environmentally friendly way possible. A range of aspects need to be considered, for instance the type of energy used (renewable or fossil fuels), the cloud computing infrastructure, the energy consumption of the AI system, the components used, and the recycling of the AI system’s component waste. The social impact of AI systems should also be carefully assessed, including their effects on individuals’ physical and mental well-being, since exposure to AI systems now extends to all aspects of people’s lives and such systems can mislead individuals. The following should be taken into account to comply with this requirement:

– Ensuring that users are informed and capable of using the system correctly.

– Ease of access to services without using the AI system. Where AI systems are intended to replace or complement public services, full non-system alternatives should be available.

– Explicit information on the design process should be available, including a clear description of aims and motivation, stakeholders, public consultation process and impact assessment.

7. Accountability

The requirement of accountability complements the above requirements and is closely linked to the principle of fairness. It requires mechanisms to be put in place to ensure responsibility and accountability for AI systems and their outcomes, both before and after their development, deployment and use. The following measures are recommended to comply with the requirement:

– The system should be covered by an explicit, clear legal framework or sectorial formal policies, and these should be addressed explicitly.

– Users should be able to contest decisions/actions or demand human intervention. These processes should be set up and clearly available.

1.2.5. EP Resolution 2020/2012(INL) on a Framework of Ethical Aspects of Artificial Intelligence, Robotics and Related Technologies

In October 2020, the European Parliament (EP) adopted Resolution 2020/2012(INL) on a Framework of Ethical Aspects of Artificial Intelligence, Robotics and Related Technologies. The Resolution highlights the need for a human-centric and human-created approach to AI and for a risk-based approach to regulating it. Its relevance in the AI4Media context lies in its acknowledgement of the growing potential of AI in the areas of information, media and online platforms, including as a tool to fight disinformation. However, if AI in these areas is not regulated, it may have adverse effects: by exploiting biases in data and algorithms, it may contribute to the dissemination of disinformation and the creation of information bubbles. The Resolution therefore calls for a common Union regulatory framework for AI, based on Union law and values and guided by the principles of transparency, explainability, fairness, accountability, and responsibility.

Authors:

Lidia Dutkiewicz, Emine Ozge Yildirim, Noémie Krack from KU Leuven

Lucile Sassatelli from Université Côte d’Azur
