As part of the AI4Media project, VRT, the national public-service broadcaster for the Flemish Community of Belgium, has been integrating new AI applications into its workflows. This is a highly complex process with much to learn from good practice. Here, VRT shares a few of its insights.

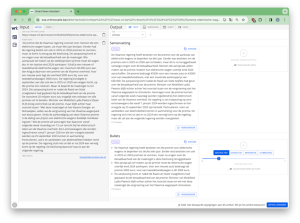

To facilitate better integration processes, VRT has developed a stand-alone tool that makes the possibilities and functionalities of a new AI application visible and tangible to the team involved. This enables key stakeholders, such as editors-in-chief, to better assess the added value and to make an informed decision about whether to go ahead with the integration.

The tool is called the Smart News Assistant, a name intended to express its role in 'assisting' or 'co-creating'. This emphasis reflects how important it is for news professionals to remain in control of the production process.

Starting from the content source

During the tool's development, VRT's news department stressed that it was essential to always start from existing content when assessing the capabilities of a potential AI solution, as this ensures a sense of reliability and control.

The tool therefore lets the user start from an existing piece of content (video, audio or text) and see how AI could generate new content formats from it, such as a short video or an Instagram post.

Once the possibilities of AI have been made tangible in the Smart News Assistant, the editors-in-chief can assess their added value. If the editors see potential in the solution during this initial test, VRT's innovation team proceeds to explore integration possibilities. This involves collaborating with the technology teams responsible for the surrounding systems into which the AI application is to be integrated.

Towards integrating automatic summarization

One of the applications made tangible with the Smart News Assistant was automatic summarization, in which a news article is automatically turned into bullet points. Editors saw this as highly valuable, and VRT is now working towards integrating it. The process poses many challenges, however, such as integration with the existing CMS.

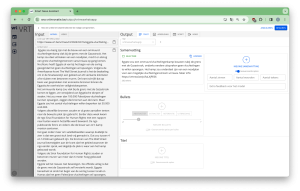

In this work, the team is focusing not only on integrating the AI functionalities into the familiar news production flow but also on emerging formats. For example, VRT recently introduced WhatsApp updates, for which VRT NWS has its own channel, and the summarization tool might also prove useful in that format. By thinking beyond the existing news flow, the team can carry out integration work more efficiently and anticipate other potential use cases.

This is also where the Smart News Assistant provides additional value: while the integration process is ongoing, editors can already use the AI tool through the Smart News Assistant interface. Although the tool is not yet directly integrated into their workflows, editors can manually copy and paste the textual suggestions from the interface into their CMS. This places less pressure on the integration team and gives editors immediate value from the AI solution, even if it requires a few extra clicks.

Screenshot of the Smart News Assistant (summary), by Chaja Libot (VRT)

Screenshot of the Smart News Assistant (WhatsApp update and fine-tuning result), by Chaja Libot (VRT)

Author: Chaja Libot (Design Researcher, VRT)